Character recognition with neural networks in Java

Introduction

Neural networks are a well-known means of performing character recognition (even if they are not the best one). The purpose of this page is to present a model of a neural network used for character recognition, along with its Java implementation.

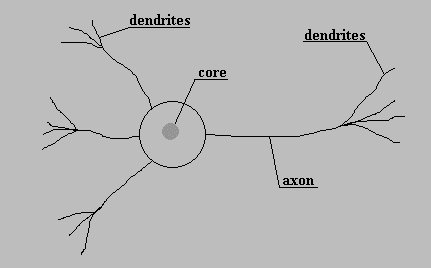

Model of a neuron

Even if nobody really knows how the human brain works, computer scientists have long been looking for a way to approximate its extraordinary capacity for learning. The picture above shows the basic component of the human brain, the neuron. Its operating principle is quite simple: it sums what it receives on its inputs (the dendrites) and, if the resulting level is high enough, it sends a signal on its output (the axon).

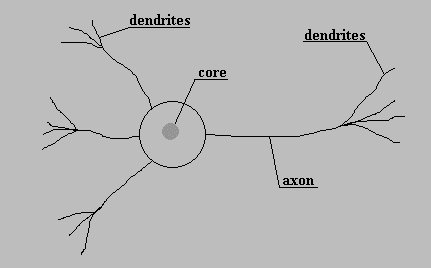

So, it was simple to imagine the computer equivalent of a human neuron:

The picture shows an idealized neuron. Such a device is very simple to implement, and the analogy with the human neuron is rather obvious. In this idealized version, the activation function (how the neuron behaves as a function of its inputs) is a step function. Another popular choice is the sigmoid function 1/(1+exp(-x)), which is the one used in the implementation of the applet.

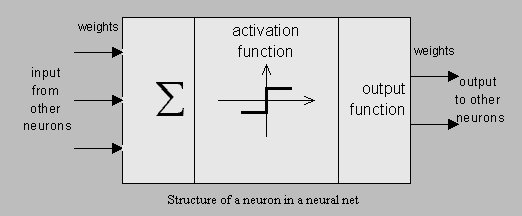

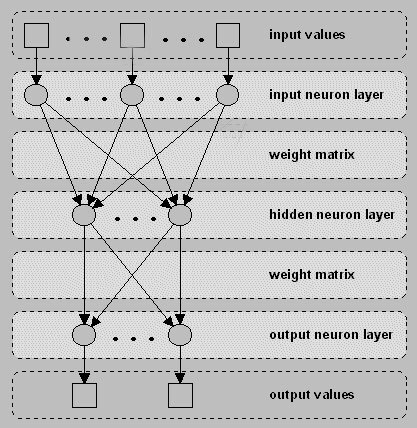
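As a rough illustration of the idealized neuron described above, here is a minimal Java sketch. The class name, the method names and the explicit threshold parameter are assumptions made for this example; they are not taken from the applet's source.

```java
// Sketch of an idealized neuron; all names here are illustrative.
class IdealNeuron {
    // Weighted sum of the inputs, i.e. what the neuron receives.
    static double weightedSum(double[] inputs, double[] weights) {
        double sum = 0.0;
        for (int k = 0; k < inputs.length; k++) {
            sum += inputs[k] * weights[k];
        }
        return sum;
    }

    // Step activation: fires (1) once the summed input reaches the
    // threshold (the threshold value is an assumed parameter).
    static double step(double x, double threshold) {
        return x >= threshold ? 1.0 : 0.0;
    }

    // Sigmoid activation 1/(1+exp(-x)): a smooth alternative to the step.
    static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    public static void main(String[] args) {
        double[] inputs = {1.0, 0.0, 1.0};
        double[] weights = {0.5, 0.9, 0.25};
        double sum = weightedSum(inputs, weights);
        System.out.println("step:    " + step(sum, 0.5));
        System.out.println("sigmoid: " + sigmoid(sum));
    }
}
```

The sigmoid is differentiable everywhere, which is what makes the backpropagation procedure possible; the step function is not.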

The backpropagation network

The architecture of the net is that of a basic backpropagation network with only one hidden layer (although the same technique applies with more layers). The input layer consists of 35 neurons (one per input pixel in the matrix, of course), there are 8 hidden neurons, and 26 output neurons (one per letter). The weight matrices give the weight factor for each input of each neuron. These matrices are what we can call the memory of the neural network. Learning is done by adjusting these weights so that, for each given input, the output is as near as possible to a wanted output (here, the full activation of the output neuron corresponding to the character to be recognized).
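To make the sizes concrete, the following sketch allocates the two weight matrices and builds the wanted output for a letter. The class and method names, the random range [-0.5, 0.5) and the mapping of output neuron k to the k-th letter of the alphabet are assumptions for illustration only.

```java
// Sketch of the network's "memory"; names and init range are assumptions.
class NetLayout {
    static final int INPUTS = 35;   // one per pixel of the input matrix
    static final int HIDDEN = 8;    // hidden-layer neurons
    static final int OUTPUTS = 26;  // one per letter

    // Weight matrix filled with small random values in [-0.5, 0.5).
    static double[][] randomMatrix(int rows, int cols, java.util.Random rng) {
        double[][] m = new double[rows][cols];
        for (int r = 0; r < rows; r++) {
            for (int c = 0; c < cols; c++) {
                m[r][c] = rng.nextDouble() - 0.5;
            }
        }
        return m;
    }

    // Wanted output for an uppercase letter: full activation of its
    // neuron, zero everywhere else (assumes neuron 0 is 'A').
    static double[] targetFor(char letter) {
        double[] t = new double[OUTPUTS];
        t[letter - 'A'] = 1.0;
        return t;
    }
}
```

With these sizes, the first matrix holds 35 x 8 weights and the second 8 x 26, for example `NetLayout.randomMatrix(NetLayout.INPUTS, NetLayout.HIDDEN, new java.util.Random())`.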

The learning process

We start with random values assigned to the weight matrices, and we gradually adjust the weights (i.e. train the network) by performing the following procedure for all pattern pairs:

Forward pass

1) Compute the hidden-layer neuron activations:

$$h = F(i\,W_1 + \mathrm{bias}_1)$$

where h is the vector of hidden-layer neurons, i the vector of input-layer neurons, and W1 the weight matrix between the input and hidden layers. bias1 is the bias on the computed activation of the hidden-layer neurons. F is the sigmoid activation function, here F(x) = 1/(1+exp(-x)), applied to each element of its argument.

2) Compute the output-layer neuron activations:

$$o = F(h\,W_2 + \mathrm{bias}_2)$$

where o represents the output layer, h the hidden layer, W2 the matrix of synapses connecting the hidden and output layers, and bias2 the bias on the computed activation of the output-layer neurons.
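The two forward-pass steps can be sketched in Java as follows. This is a minimal illustration, not the applet's code: the class and method names are hypothetical, and the weight matrices are assumed to be stored as `w[j][k]`, the weight from input j to neuron k.

```java
// Sketch of the forward pass; names and array layout are assumptions.
class ForwardPass {
    static double sigmoid(double x) {
        return 1.0 / (1.0 + Math.exp(-x));
    }

    // Computes F(in * w + bias) for one layer, where w[j][k] is the
    // weight between input j and neuron k of the layer.
    static double[] layer(double[] in, double[][] w, double[] bias) {
        double[] out = new double[bias.length];
        for (int k = 0; k < out.length; k++) {
            double sum = bias[k];
            for (int j = 0; j < in.length; j++) {
                sum += in[j] * w[j][k];
            }
            out[k] = sigmoid(sum);
        }
        return out;
    }

    // Step 1 (hidden layer) followed by step 2 (output layer).
    static double[] forward(double[] i, double[][] w1, double[] bias1,
                            double[][] w2, double[] bias2) {
        double[] h = layer(i, w1, bias1);
        return layer(h, w2, bias2);
    }
}
```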

Backward pass

3) Compute the output-layer error (the difference between the target and the observed output, scaled by the derivative of the sigmoid):

$$d = o\,(1-o)\,(t-o)$$

where d is the vector of errors for each output neuron, o the output-layer vector, and t the target (correct) activation of the output layer. The products are taken element by element.

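4) Compute the hidden-layer error:

$$e = h\,(1-h)\,(d\,W_2^{T})$$

where e is the vector of errors for each hidden-layer neuron. Each output error is propagated back through the weights of W2, and the products with h(1-h) are taken element by element.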

5) Adjust the weights for the second layer of synapses:

$$W_2 = W_2 + CW_{2,t}$$

where CW2 is a matrix representing the change in matrix W2. It is computed as follows:

$$CW_{2,t} = r\,h^{T} d + O\,CW_{2,t-1}$$

where r is the learning rate, O the momentum factor, and the product of h transposed with d is the matrix whose element (j, k) is the j-th component of h times the k-th component of d. The momentum factor allows the previous weight change to influence the weight change in this time period, t. This does not mean that time is somehow incorporated into the model. It means only that a weight adjustment may depend to some degree on the previous weight adjustment made.

6) Adjust the weights for the first layer of synapses:

$$W_1 = W_1 + CW_{1,t}$$

where

$$CW_{1,t} = r\,i^{T} e + O\,CW_{1,t-1}$$

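7) Adjust the bias vectors:

$$\mathrm{bias}_1 = \mathrm{bias}_1 + r\,e$$

$$\mathrm{bias}_2 = \mathrm{bias}_2 + r\,d$$

bias1 belongs to the hidden layer, so it is adjusted with the hidden-layer error e; bias2 belongs to the output layer and is adjusted with d.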

Repeat steps 1 to 7 on all pattern pairs until the output layer error (vector d) is within the specified tolerance for each pattern and each neuron.
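The whole procedure for one pattern pair can be sketched as a small Java class. This is an illustrative sketch under stated assumptions, not the applet's actual source: all names are hypothetical, the initial weights are drawn from [-0.5, 0.5), and the momentum value is left to the caller since the text does not give one. The sketch takes the output error as o(1-o)(t-o), the sign for which the additive updates of steps 5 to 7 reduce the error, and it adjusts bias1 with e and bias2 with d so that the vector sizes match their layers. As a tolerance measure, `train` returns the largest |t - o| rather than the vector d itself.

```java
// Sketch of steps 1 to 7 for one pattern pair; all names are illustrative.
class BackpropNet {
    final double[][] w1, w2, cw1, cw2; // weights and their previous changes
    final double[] bias1, bias2;
    final double rate, momentum;       // r and O in the text

    BackpropNet(int nIn, int nHid, int nOut, double rate, double momentum, long seed) {
        java.util.Random rng = new java.util.Random(seed);
        w1 = new double[nIn][nHid];  cw1 = new double[nIn][nHid];
        w2 = new double[nHid][nOut]; cw2 = new double[nHid][nOut];
        bias1 = new double[nHid];    bias2 = new double[nOut];
        for (double[] row : w1) for (int k = 0; k < nHid; k++) row[k] = rng.nextDouble() - 0.5;
        for (double[] row : w2) for (int k = 0; k < nOut; k++) row[k] = rng.nextDouble() - 0.5;
        this.rate = rate;
        this.momentum = momentum;
    }

    // F(in * w + bias) with the sigmoid as F.
    static double[] layer(double[] in, double[][] w, double[] bias) {
        double[] out = new double[bias.length];
        for (int k = 0; k < out.length; k++) {
            double sum = bias[k];
            for (int j = 0; j < in.length; j++) sum += in[j] * w[j][k];
            out[k] = 1.0 / (1.0 + Math.exp(-sum));
        }
        return out;
    }

    // Forward pass only, used once the network is trained.
    double[] recall(double[] in) {
        return layer(layer(in, w1, bias1), w2, bias2);
    }

    // One pass of steps 1 to 7; returns the largest |t - o| seen
    // before the weights were adjusted.
    double train(double[] in, double[] t) {
        double[] h = layer(in, w1, bias1);                  // step 1
        double[] o = layer(h, w2, bias2);                   // step 2
        double[] d = new double[o.length];
        double worst = 0.0;
        for (int k = 0; k < o.length; k++) {                // step 3
            d[k] = o[k] * (1 - o[k]) * (t[k] - o[k]);
            worst = Math.max(worst, Math.abs(t[k] - o[k]));
        }
        double[] e = new double[h.length];
        for (int j = 0; j < h.length; j++) {                // step 4, with W2 not yet adjusted
            double back = 0.0;
            for (int k = 0; k < o.length; k++) back += w2[j][k] * d[k];
            e[j] = h[j] * (1 - h[j]) * back;
        }
        adjust(w2, cw2, h, d);                              // step 5
        adjust(w1, cw1, in, e);                             // step 6
        for (int j = 0; j < e.length; j++) bias1[j] += rate * e[j];   // step 7
        for (int k = 0; k < d.length; k++) bias2[k] += rate * d[k];
        return worst;
    }

    // W = W + CW(t), with CW(t) = r * in^T * err + O * CW(t-1).
    private void adjust(double[][] w, double[][] cw, double[] in, double[] err) {
        for (int j = 0; j < in.length; j++) {
            for (int k = 0; k < err.length; k++) {
                cw[j][k] = rate * in[j] * err[k] + momentum * cw[j][k];
                w[j][k] += cw[j][k];
            }
        }
    }
}
```

A training loop would call `train` on every pattern pair and repeat until the returned value stays below the chosen tolerance for all of them. For the network described here, the constructor would be called with 35, 8 and 26 as layer sizes and 0.1 as learning rate.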

The network has been trained using the presented character set with 5% added noise. The learning rate used was 0.1.
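The text does not say how the noise was added. One plausible reading, shown here purely as an assumption, is that each pixel of a training pattern is flipped with a probability of 5% before the pattern is presented to the network. The class and method names are hypothetical.

```java
// Sketch of one possible noise model; the pixel-flip interpretation
// of "5% added noise" is an assumption, not taken from the applet.
class NoisyPatterns {
    // Returns a copy of a 0/1 pattern in which each pixel has been
    // flipped with the given probability; the original is left untouched.
    static double[] withNoise(double[] pattern, double flipProbability, java.util.Random rng) {
        double[] noisy = pattern.clone();
        for (int k = 0; k < noisy.length; k++) {
            if (rng.nextDouble() < flipProbability) {
                noisy[k] = 1.0 - noisy[k];
            }
        }
        return noisy;
    }
}
```

Under this reading, a noisy version of a character would be obtained with a call such as `NoisyPatterns.withNoise(pattern, 0.05, new java.util.Random())`, drawing a fresh copy each time the pattern is used for training.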

The applet

The applet window pops up automatically. If nothing pops up after a while, you can try clicking the reload button of your browser. If the applet window stays empty, or in case Bill Gate$ has put a bug in the Java virtual machine (no, there is NO bug in my programs. Never.), you can try clicking the restart button of the applet window.

The blue rectangle in the top left corner represents the matrix of inputs. By clicking in it, you can set the input value of the corresponding neuron to 1 or 0. First, you can try the 26 predefined characters for which the net has been trained. Do that first, because if you click in the rectangle you will change the values in the matrix corresponding to the current character, and the only way to retrieve the predefined values is to reload the applet.

The center of the window shows the output values of the neurons of the output layer (i.e. those corresponding to the 26 letters), with their activation in percent. The output with the highest value (i.e. the closest to 1 = 100%) gives the recognized character.
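Picking the recognized character from the 26 output values amounts to finding the most activated neuron. A minimal sketch, assuming that output neuron k stands for the k-th letter of the alphabet (the names below are hypothetical):

```java
// Sketch of the decision rule; the neuron-to-letter mapping is assumed.
class Recognizer {
    // Index of the output neuron with the highest activation.
    static int mostActive(double[] outputs) {
        int best = 0;
        for (int k = 1; k < outputs.length; k++) {
            if (outputs[k] > outputs[best]) {
                best = k;
            }
        }
        return best;
    }

    // Letter associated with the most active neuron (neuron 0 is 'A').
    static char recognizedLetter(double[] outputs) {
        return (char) ('A' + mostActive(outputs));
    }

    // Activation expressed in percent, rounded to the nearest integer.
    static long percent(double activation) {
        return Math.round(activation * 100.0);
    }
}
```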

The blue rectangle in the top right corner is a drawing board. Draw any character you want in it, and click on the "Character recognition" button. The applet will generate the corresponding input matrix and try to identify the character.